Understanding MDB (Modular Databases) on NCS5700 systems

Preface

In today’s network deployments, we position our routers in various roles for different use cases. Each role comes with its own set of features and, importantly, its own scale requirements.

So how do we deploy the same product while addressing these different scale requirements?

We bring in that flexibility in the form of MDB (Modular Database) profiles on our NCS5700 fixed systems and on NC57 line cards in the modular NCS5500 router operating in native mode.

Introduction to MDB

In the NCS5500/NCS5700 routers we have various on-chip databases for different purposes, such as storing the IP prefix table, MAC information, labels, access-list rules and more. On the scale variants we also have an external TCAM (OptimusPrime2 in the NCS5700 family) to offload storage of prefixes, ACL rules, QoS and so on, for a higher scale than the base systems.

We also have on-chip buffers and off-chip HBM buffers used for queuing packets. They are out of scope for the MDB discussion.

Our focus will be on the memory databases available on the Jericho2 family of ASICs.

Jericho On-Chip Databases

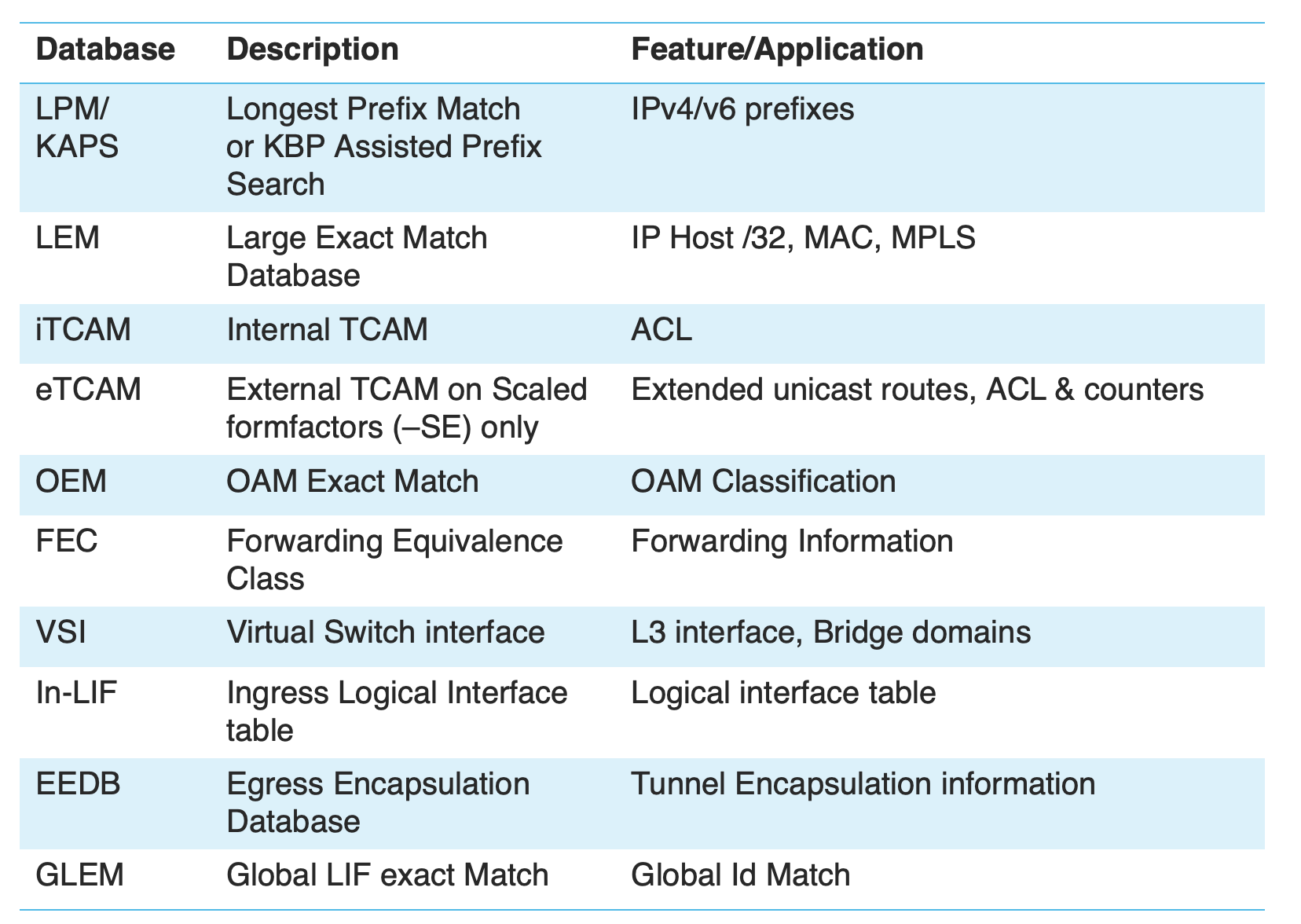

The table below gives a quick refresher of the Jericho ASIC’s on-chip resources. It shows the applications/features mapped to the important databases in the ASIC.

Table #1 Jericho on-chip databases

We use different ASICs from the same ASIC family on our NCS5500 and NCS5700 routers: Qumran-MX, Jericho, Jericho+ and the latest Jericho2, Jericho2C, Qumran2C and Qumran2A. Our latest platform in the works will come with the new Jericho2C+ ASIC from Broadcom.

The on-chip databases mentioned in table #1 are common across all these ASICs. But the flexibility to carve resources for these databases is only supported on the NCS5700 systems and line cards that use the Jericho2 family of ASICs (Jericho2, Jericho2C, Qumran2C and Qumran2A).

| NCS5500/5700 Products | MDB supported |
|---|---|
| Fixed Systems | NCS-57B1-6D24H-S, NCS-57B1-5D24H-SE, NCS-57C3-MOD-S, NCS-57C3-MOD-SE-S, NCS-57C1-48Q6D-S, NCS-57D2-18DD-S* |
| Line cards supported on NCS5504, NCS5508, NCS5516 (Native Mode only) | NC57-18DD-SE, NC57-24DD, NC57-36H-SE, NC57-36H6D-S, NC57-MOD-S, NC57-48Q2D-S*, NC57-48Q2D-SE-S* |

Table #2 NCS5700 PIDs support for MDB (* PIDs in works for future releases)

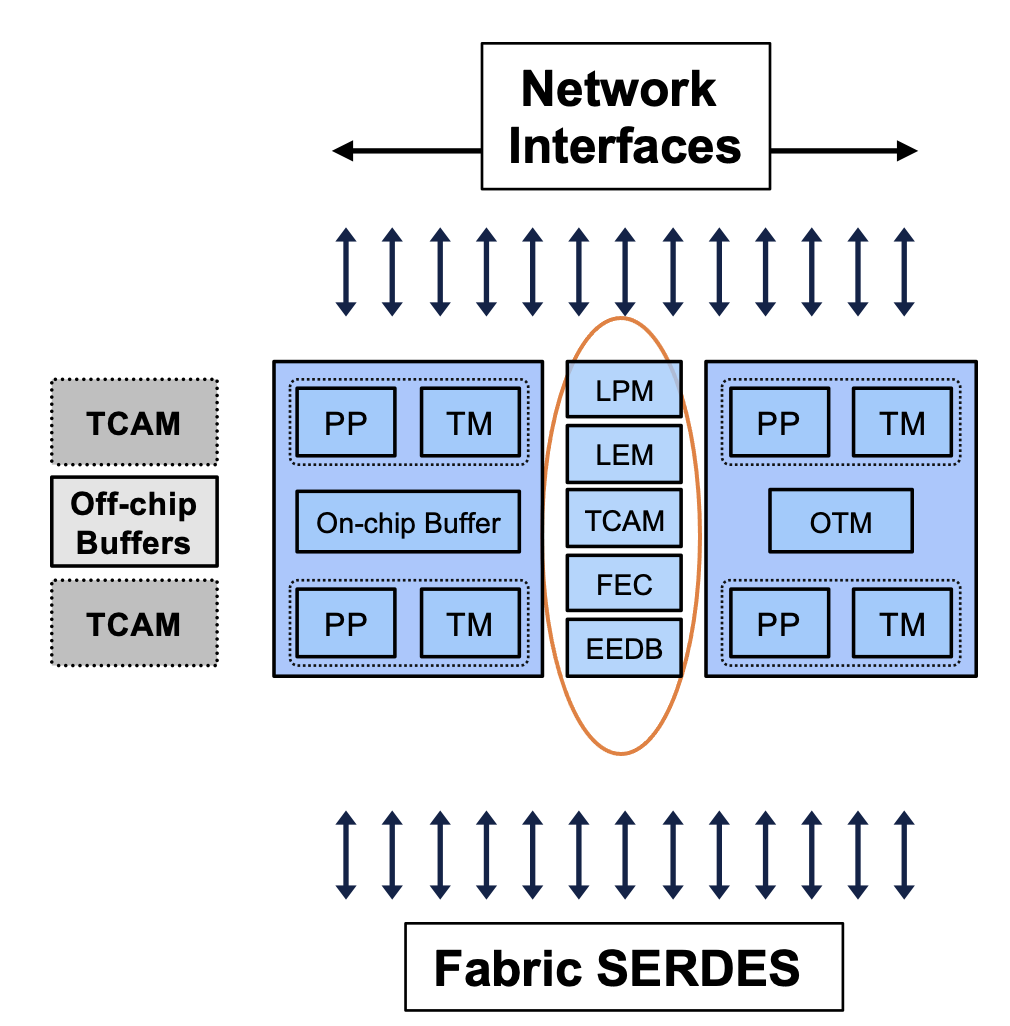

Let’s look into the forwarding ASIC layout. The blue boxes (which are circled) are some of the important on-chip memories used for storing information like IP prefixes, labels, MAC tables, next-hop information and more.

Other components, such as the buffers and blocks used for packet buffering and processing, are not impacted by MDB carving.

Picture #1 ASIC layout

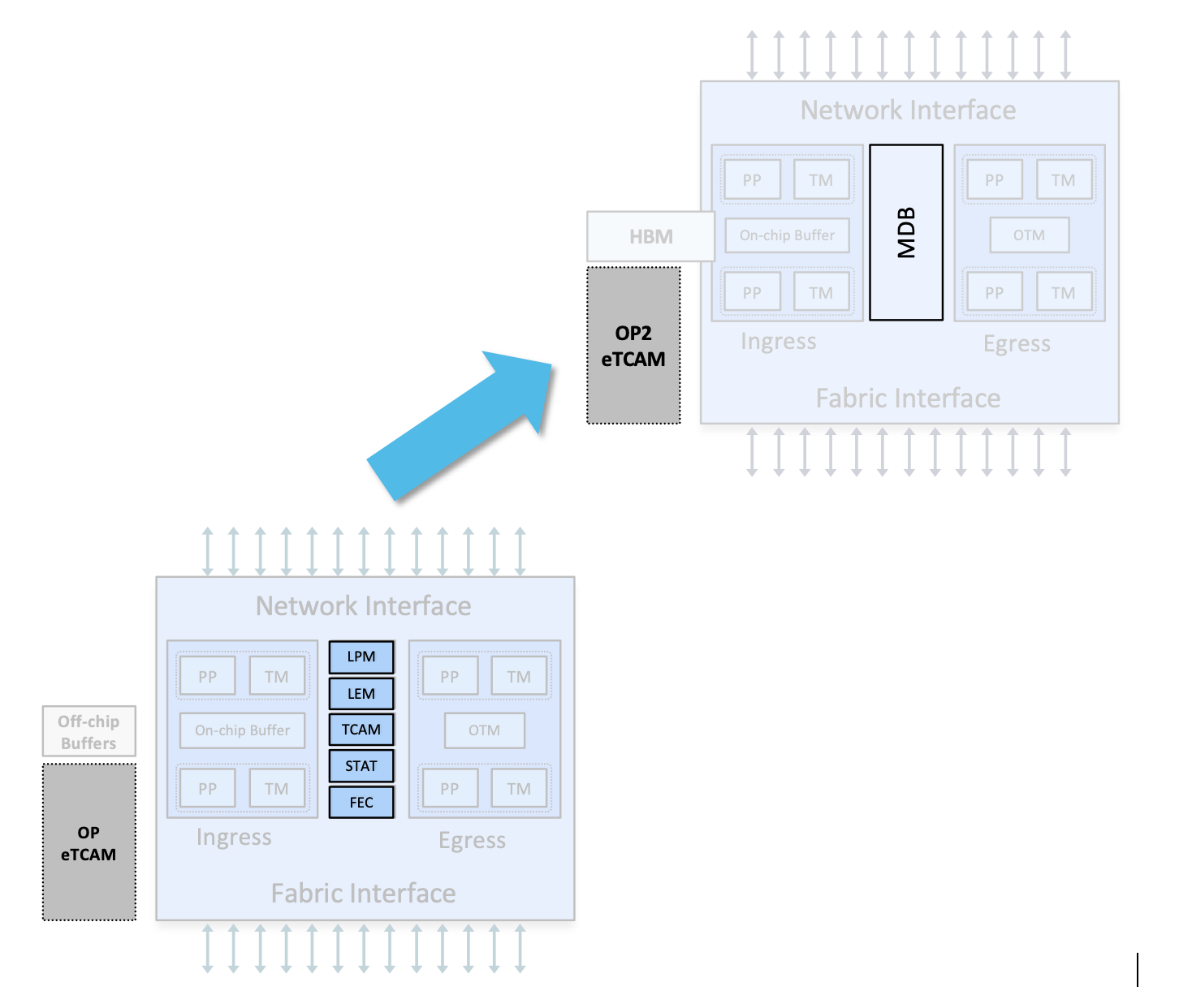

On Jericho2 based platforms we give the user the flexibility to carve resources for the circled on-chip databases in picture #1 based on the MDB profiles, which are applied during system bootup. In the pictorial representation below (picture #2), we can see how the static carving of resources for on-chip databases has been made modular.

_Picture #2 MDB compatible Databases_

On the left we have Jericho1 based systems, where the database carving is always static; it is now modular on the Jericho2 based platforms. On a scale system with external TCAM, the on-chip resource carving for MDB takes into account how the features use resources in the OP2 external TCAM.

Benefits of MDB

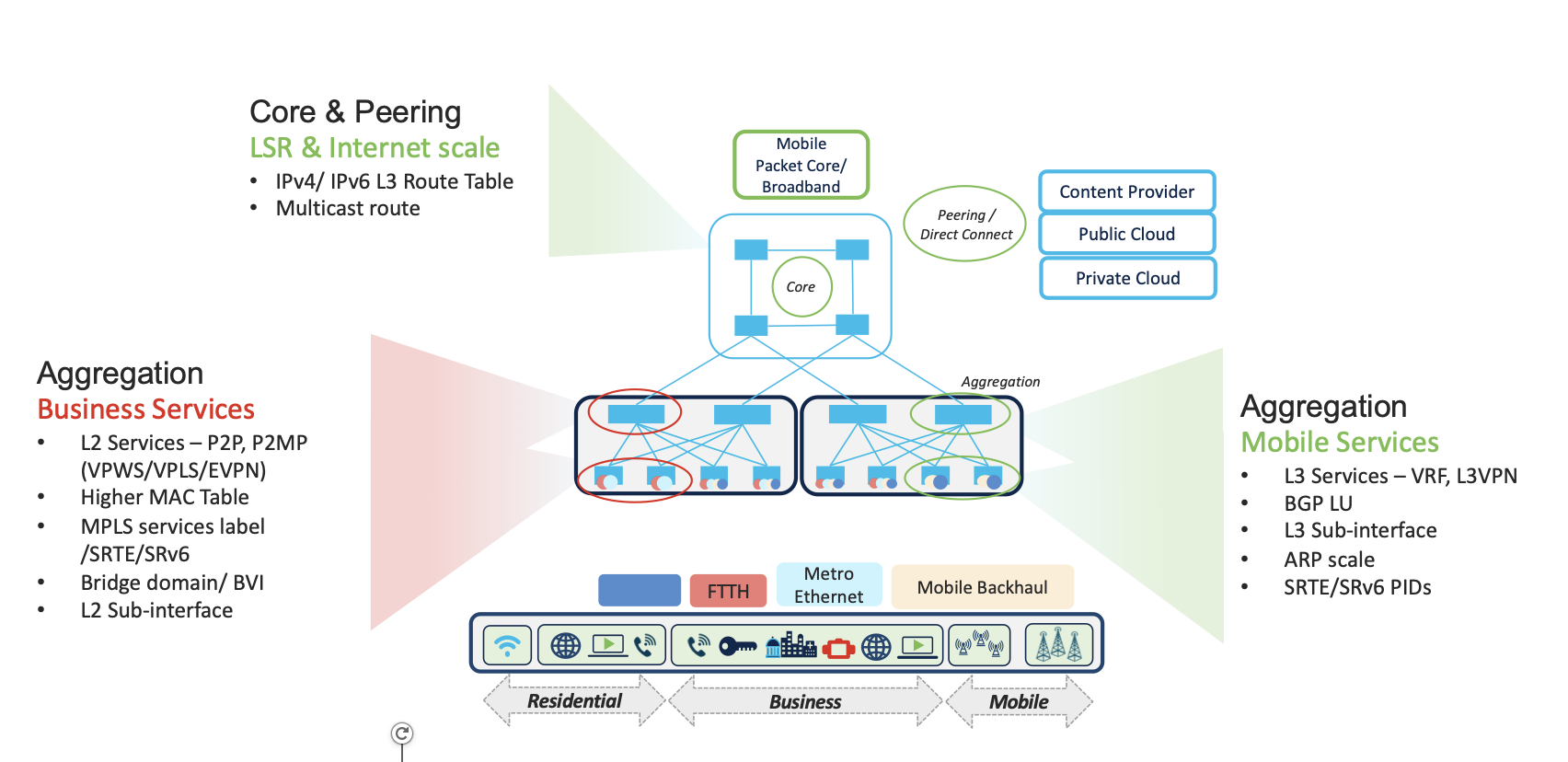

In today’s network deployments we position our routers in various use cases ranging from metro access, aggregation and core to peering, DCI, cloud interconnect and more. Each use case comes with its own set of features and, importantly, its own scale requirements.

Take the peering use case, where we position our high-density aggregation devices at the edge of the network with a high scale of eBGP sessions for metro, datacenter and Internet peering. Here we need Layer 3 rich features such as high-capacity routing scale and security features like VRFs, ACLs, Flowspec (FS) and more.

Business aggregation use cases based on carrier-ethernet deployments, on the other hand, are Layer 2 heavy and require higher MAC, L2VPN or bridge-domain scale.

Picture #3 Network deployment use cases


So, it’s obvious that the requirements are not the same for these different use cases. Rather than just having a fixed profile, why not give users some flexibility to carve resources for the databases that fit their scale requirements? That flexibility is available with the MDB feature!

Path to MDB

During the initial release of the NCS5700 platform, we started by shipping NCS57 based line cards on the NCS5500 system running in compatible mode alongside previous generation line cards based on Jericho1. We had a default system profile that tuned and restricted the scale of system resources based on Jericho1 scale parameters.

Then, in the subsequent release, we started supporting native mode, with all LCs on a modular NCS5500 being Jericho2 (NCS57) based, for higher scale than compatible mode. We supported both base and scale variants of Jericho2 LCs with custom scale profiles.

In the next release (IOS-XR 7.3.1) we developed the MDB infrastructure in the IOS-XR software and introduced default profiles with higher scale: balanced (for base systems) and balanced-SE (for scale systems). We also made these the default profiles on the new SoC (system on chip) routers released in XR 7.3.1.

Please note that the balanced/balanced-SE profiles were enabled by default and were not user configurable.

Picture #4 Default MDB Profile - 7.3.1

Picture #4 above depicts the behavior in the 7.3.1 release on J2 based modular and fixed systems operating in native mode.

In XR 7.4.1, the balanced profiles were reincarnated as the L3MAX and L3MAX-SE profiles with better scale optimizations. On NCS5500 modular systems in native mode, the default was L3MAX, and we introduced the “hw-module profile mdb l3max-se” configuration to enable L3MAX-SE if all the line cards in the system are scale (-SE) cards. See https://www.cisco.com/c/en/us/td/docs/iosxr/ncs5500/system-setup/76x/b-system-setup-cg-ncs5500-76x/m-system-hw-profiles.html

On the SoC Jericho2 boxes, which always operate in native mode, we enable the right profile (base or SE) based on the availability of eTCAM.

Picture #5 Modular ncs5500: MDB modes during 7.4.1


Picture #6 SoC systems default modes


Picture #7 Q2C based fixed system default mode in 7.5.2


Then in release XR 7.6.1 we introduced the Layer 2 feature centric L2MAX and L2MAX-SE profiles for the base and scale variants of NCS5700 routers and line cards. The default mode of operation remains L3MAX(-SE), and users who want L2 rich resource carving can configure the L2MAX(-SE) profiles.

Please note that these MDB profiles are supported on our systems with J2, J2C, Q2C and Q2A ASICs. MDB will also be supported on the J2C+ based system planned for XR 7.8.1.

In the latest releases (7.6.1 onwards), all SoC and modular systems (in native mode) support all 4 MDB profiles.

| No | MDB profile | System configuration | External TCAM presence |
|---|---|---|---|
| 1 | L3MAX | Base LCs & Fixed Systems | No external TCAM |
| 2 | L3MAX-SE | Scale LCs & Fixed Systems | With external TCAM |
| 3 | L2MAX | Base LCs & Fixed Systems | No external TCAM |
| 4 | L2MAX-SE | Scale LCs & Fixed Systems | With external TCAM |

Table #3 MDB profiles
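To make Table #3 and the release history above a little more concrete, here is a minimal Python sketch that records each profile together with its external TCAM dependency and the release in which this article says it appeared. The names `MdbProfile`, `PROFILES` and `available_profiles` are purely illustrative assumptions and are not part of IOS-XR.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MdbProfile:
    """One row of Table #3 (illustrative model, not an IOS-XR object)."""
    name: str
    needs_external_tcam: bool
    first_release: tuple  # (major, minor, patch) as quoted in this article


# Release numbers are taken from the text: L3MAX(-SE) in 7.4.1, L2MAX(-SE) in 7.6.1.
PROFILES = {
    "l3max": MdbProfile("l3max", False, (7, 4, 1)),
    "l3max-se": MdbProfile("l3max-se", True, (7, 4, 1)),
    "l2max": MdbProfile("l2max", False, (7, 6, 1)),
    "l2max-se": MdbProfile("l2max-se", True, (7, 6, 1)),
}


def available_profiles(release: str) -> list:
    """Return the profile names this article lists as available in `release`."""
    version = tuple(int(part) for part in release.split("."))
    return [name for name, p in PROFILES.items() if p.first_release <= version]


if __name__ == "__main__":
    for rel in ("7.3.1", "7.4.1", "7.6.1"):
        print(rel, available_profiles(rel))
```

For 7.3.1 the sketch returns an empty list, which matches the note above that the balanced/balanced-SE profiles of that release were not user configurable.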

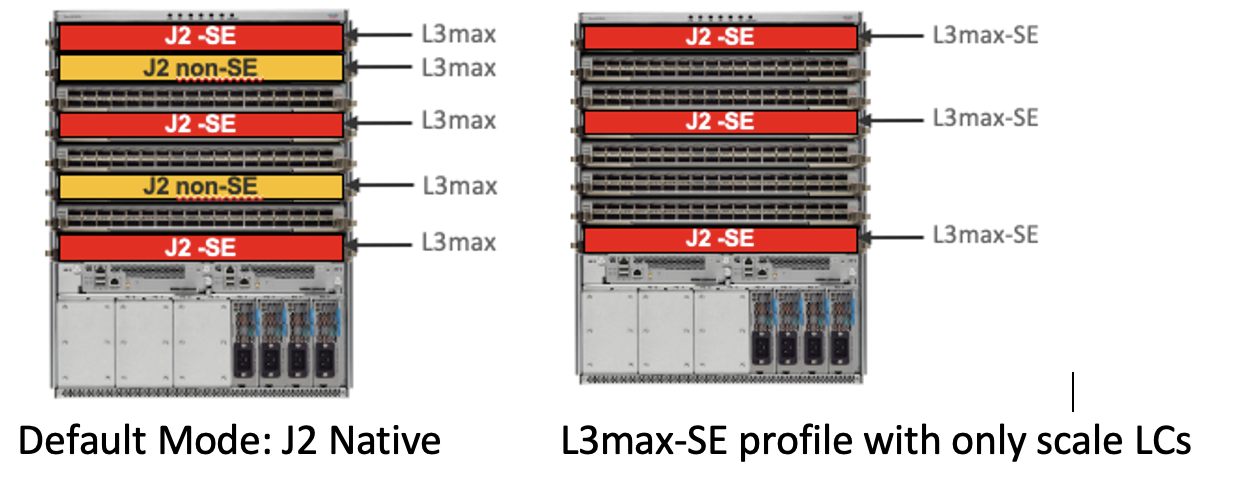

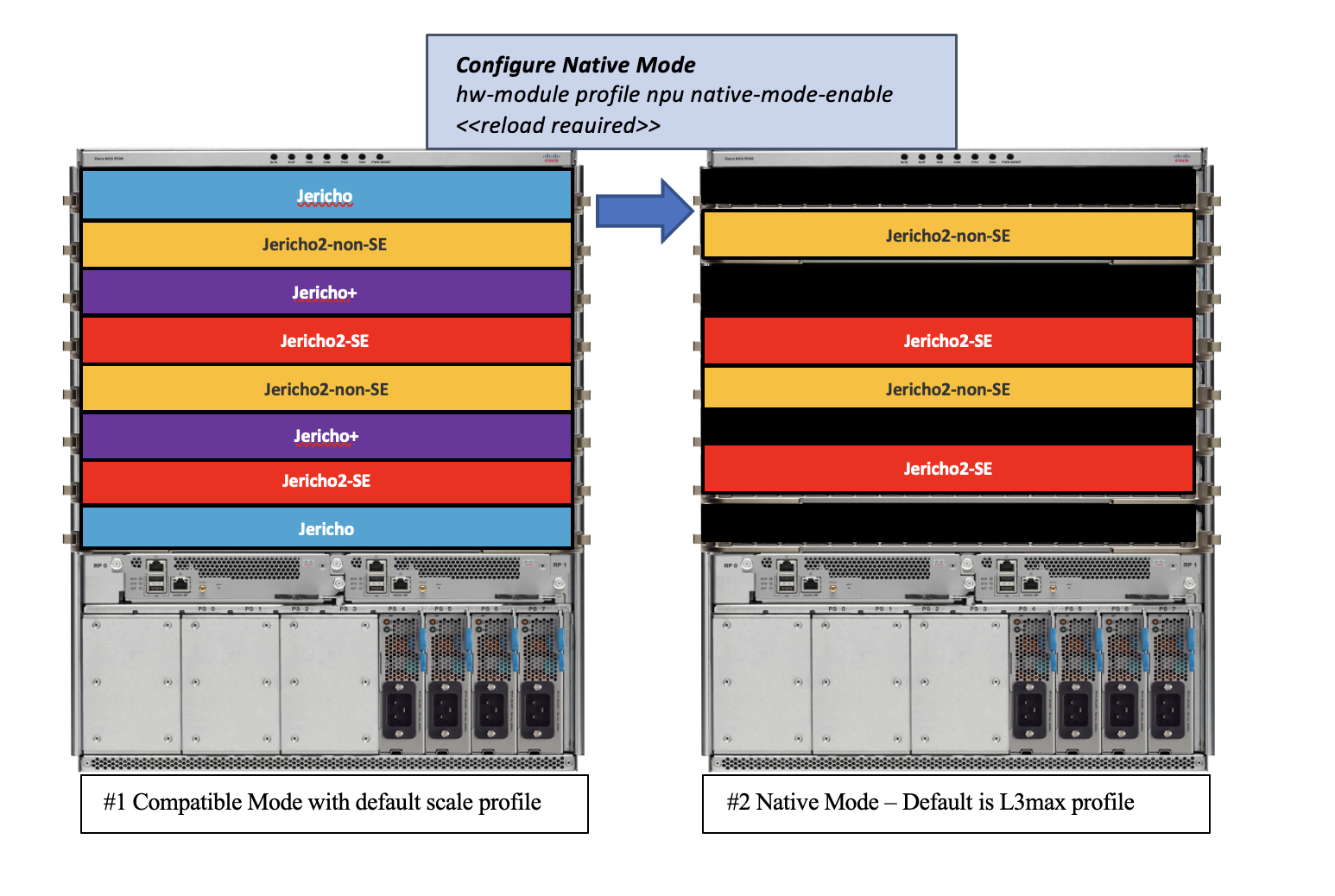

MDB on Modular systems

This is a bit tricky. By default, NCS5500 systems boot up in compatible mode. We need the explicit “hw-module profile npu native-mode-enable” configuration to enable native mode, provided all the line cards are NC57 based.

With native mode configured (post reload), the system operates in L3MAX mode by default in the latest releases (7.4.1 and beyond).

Users can configure any of the MDB profiles based on their card configurations and requirements (the L2 profiles are included from 7.6.1).

Step by Step transition towards NCS5700 with MDB:

Let’s start with an NCS5500 system that has a mix of Jericho1 and Jericho2 LCs. By default it operates in compatible mode with a default scale profile.

- The first step is to remove the Jericho/Jericho+ LCs and convert it into a Jericho2 only system.

- The next step is to configure “native mode” and reload the system for the mode to take effect. The MDB profile will be set to L3max (this is the right profile if we have a mix of scale and base J2 LCs).

Picture #9 Compatible to native mode migration

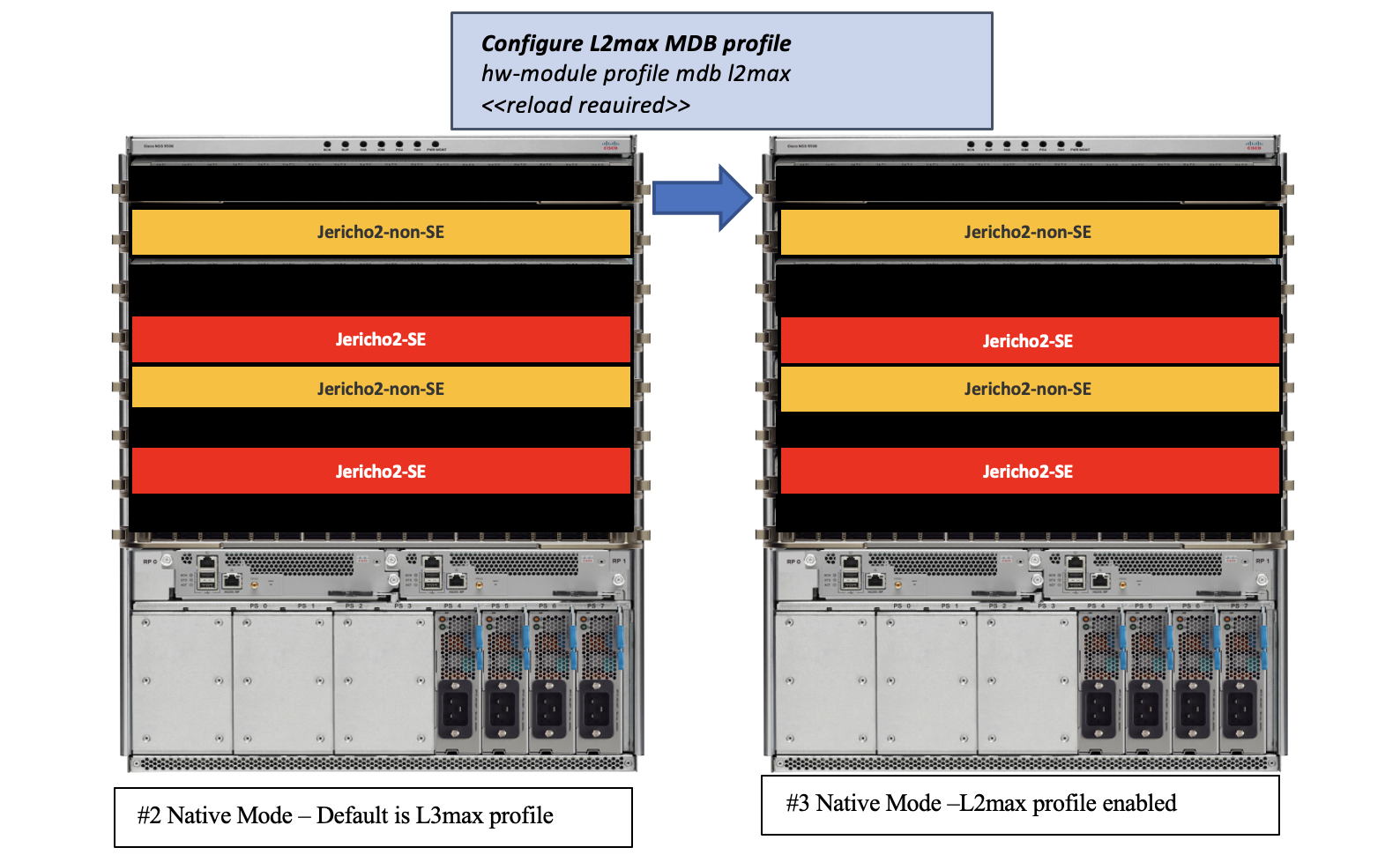

- In state #2 above (with mixed scale and base J2 LCs), the user can configure the L2max profile based on their requirements.

Picture #10 L2max MDB configuration

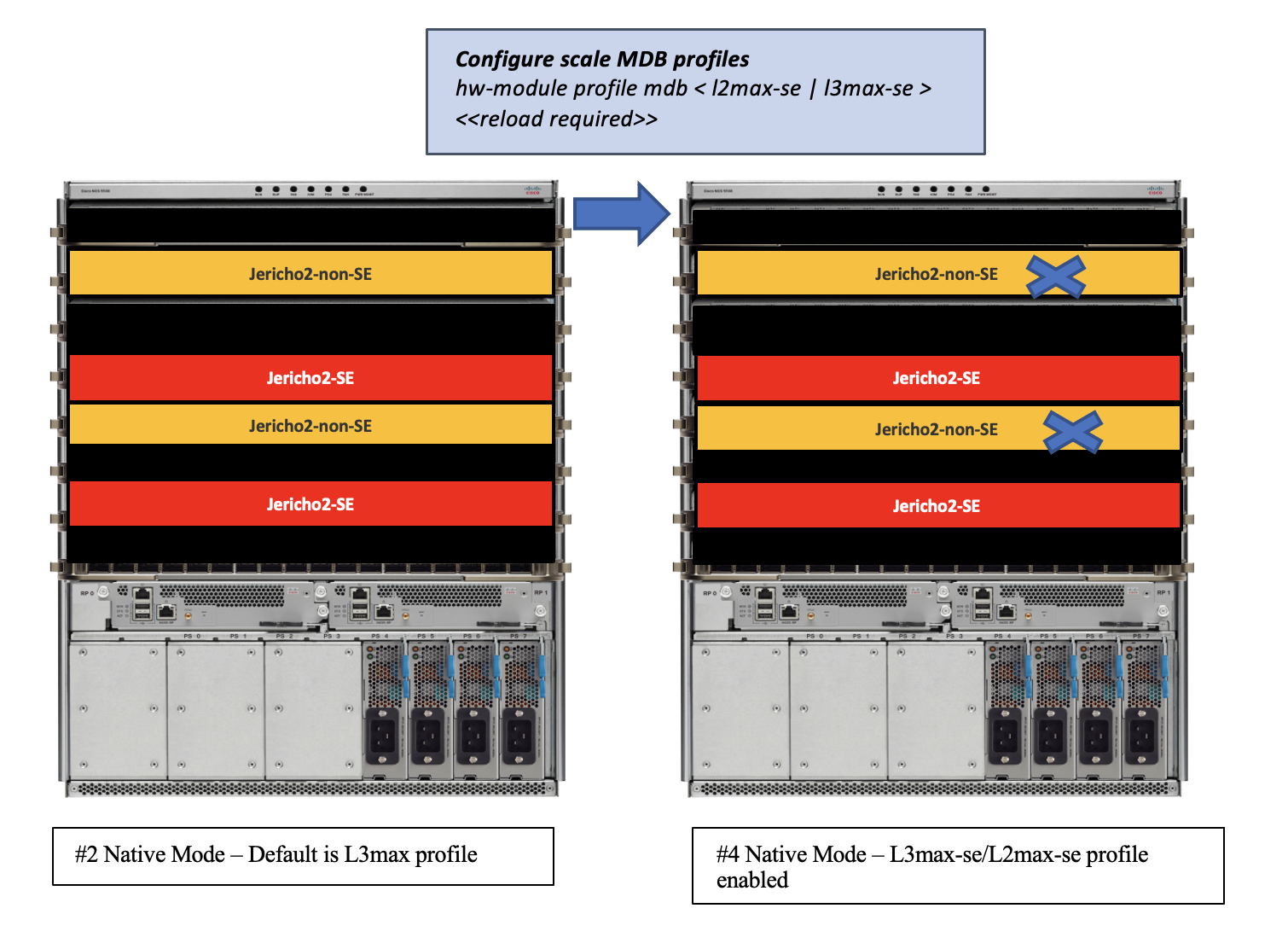

- If the user wants to operate only with J2 scale line cards, to use the full potential of the scale and the extra features (e.g. Flowspec) they offer, the l3max-se/l2max-se profiles can be enabled. Any base (non-SE) cards in the system won’t boot up, as shown in the picture below.

Picture #11 L3max-SE/L2max-SE MDB configuration

We can also combine the native mode conversion and the new MDB profile configuration in a single reload. For example, steps #1 to #3 or #4 can be achieved with a single reload.
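The rule in the last step (base cards do not boot under an -SE profile) can be sketched as a small pre-check before committing a profile. This is only an illustration: the helper names are hypothetical, and detecting a scale card by an `SE` token in the PID is an assumption that happens to hold for the line cards listed in Table #2, not an official rule.

```python
def is_scale_card(pid: str) -> bool:
    """Assumption: scale (-SE) line cards carry an 'SE' token in their PID,
    e.g. NC57-18DD-SE or NC57-48Q2D-SE-S."""
    return "SE" in pid.upper().split("-")


def cards_that_will_not_boot(profile: str, line_cards: list) -> list:
    """Return the PIDs expected to stay down under the given MDB profile.

    Per the article, base (non-SE) cards do not boot when an -SE profile
    is configured; base profiles work with a mix of base and scale cards.
    """
    if not profile.lower().endswith("-se"):
        return []
    return [pid for pid in line_cards if not is_scale_card(pid)]


if __name__ == "__main__":
    chassis = ["NC57-18DD-SE", "NC57-24DD", "NC57-36H-SE"]
    for profile in ("l3max", "l2max", "l3max-se", "l2max-se"):
        print(profile, cards_that_will_not_boot(profile, chassis))
```

Running a check like this against the installed cards before the reload avoids discovering after the fact that a base card stayed down.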

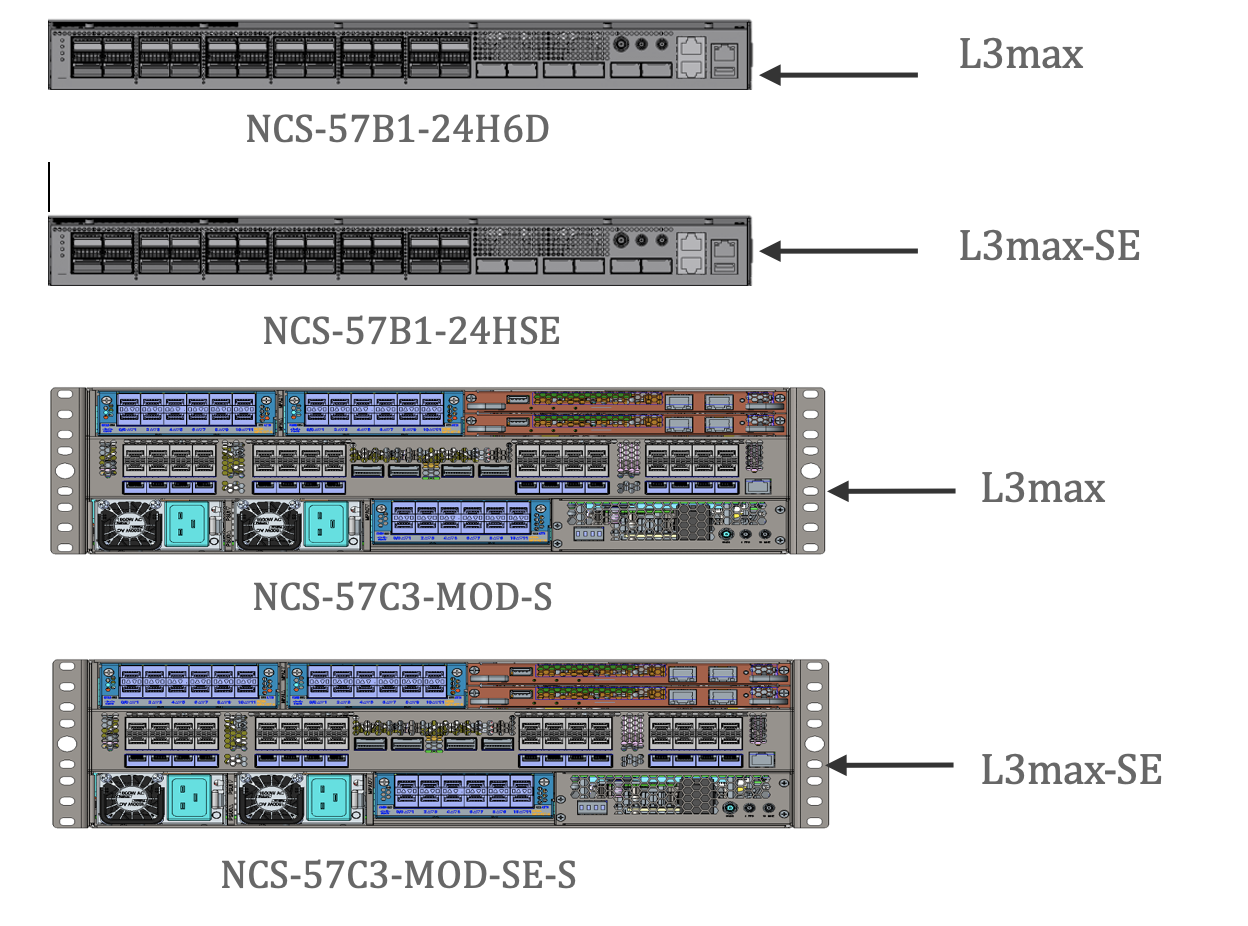

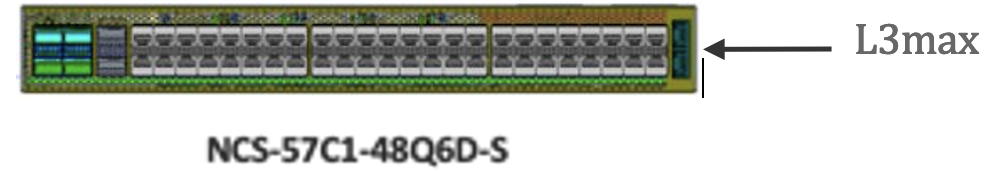

MDB on Fixed systems

This is straightforward. The fixed NCS5700 SoC systems always operate in native mode and come up by default with L3max on base systems and L3max-SE on scale systems. Users can configure the L2max(-SE) profiles, which take effect with a system reload.

| Platform | Default | Configurable Options (Recommended**) |
|---|---|---|
| NCS57B1-24H6D | L3MAX | L2MAX |
| NCS57B1-24HSE | L3MAX-SE | L2MAX-SE |
| NCS57C3-MOD-S | L3MAX | L2MAX |
| NCS57C3-MOD-SE-S | L3MAX-SE | L2MAX-SE |
| NCS57C1-48Q6D-S | L3MAX | L2MAX |
| N540-24Q8L2DD-SYS* | L3MAX* | L2MAX* |

Table #4 MDB options on fixed systems

*N540-24Q8L2DD-SYS (Q2A based) is the only NCS540 system to support MDB at present.

*Resource carving on Q2A differs from J2/J2C/Q2C, based on resource availability.

** On SE systems the base profiles can be configured, but using a lower scale profile is not recommended.
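The pattern in Table #4 and its footnotes depends only on whether the fixed system has an external TCAM. A short Python sketch of that pattern follows; `fixed_system_options` and its dictionary keys are hypothetical names chosen for illustration, and the sketch deliberately says nothing about -SE profiles on base systems because the table does not list them.

```python
def fixed_system_options(has_external_tcam: bool) -> dict:
    """Summarise Table #4 for a fixed NCS5700 system (illustrative only)."""
    if has_external_tcam:
        return {
            "default": "l3max-se",
            "recommended": ["l2max-se"],
            # Footnote **: base profiles are configurable on SE systems,
            # but running a lower scale profile is not recommended.
            "not_recommended": ["l3max", "l2max"],
        }
    return {
        "default": "l3max",
        "recommended": ["l2max"],
        "not_recommended": [],
    }


if __name__ == "__main__":
    print("base system :", fixed_system_options(False))
    print("scale system:", fixed_system_options(True))
```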

Configuration & Verification

Configure Native Mode

hw-module profile npu native-mode-enable

Verify J2 Native mode - Only for Modular (NCS5504/08/16)

show hw-module profile npu-operating-mode

Native Mode

Configure MDB Profile

hw-module profile mdb l3max | l3max-se | l2max | l2max-se

Verify MDB Profile

show hw-module profile mdb-scale

MDB scale profile: l3max-se
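When this verification is scripted, the active profile can be pulled out of the command output with a small parser. The sketch below assumes the output contains a line shaped like the sample above (`MDB scale profile: l3max-se`); the function names are hypothetical, and how the output text is collected from the router is left out on purpose.

```python
import re
from typing import Optional

# Matches the sample line shown in this article: "MDB scale profile: l3max-se"
_PROFILE_LINE = re.compile(r"MDB scale profile:\s*(\S+)", re.IGNORECASE)


def parse_mdb_profile(show_output: str) -> Optional[str]:
    """Return the profile name found in the show output, or None if absent."""
    match = _PROFILE_LINE.search(show_output)
    return match.group(1).lower() if match else None


def profile_matches(show_output: str, expected: str) -> bool:
    """True when the parsed profile equals the expected one (case-insensitive)."""
    return parse_mdb_profile(show_output) == expected.lower()


if __name__ == "__main__":
    sample = "MDB scale profile: l3max-se\n"
    print(parse_mdb_profile(sample))
    print(profile_matches(sample, "L3MAX-SE"))
```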

Verify Resource Utilization

show controllers npu resources lem location 0/LC/CPU0

show controllers npu resources lpm location 0/LC/CPU0

show controllers npu resources exttcamipv4 | exttcamipv6 location 0/LC/CPU0 (for -SE)

show controllers npu resources fec location 0/LC/CPU0

show controllers npu resources encap location 0/LC/CPU0

show controllers npu external location 0/LC/CPU0 (for -SE)

Conclusion

We conclude here with an understanding of the flexibility that MDB brings to the NCS5700 systems, giving the user options to choose the resource carving based on their requirements.

Picture #12 Flexibility with MDB carving


As depicted in picture #12, each profile carves more resources for specific databases to support certain higher scale requirements. At a broad level, with the L2MAX profiles we get more resources for applications mapped to L2 features like MAC scale, L2VPN etc.

With the L3MAX profiles, we get higher resource carving for applications mapped to L3 features like routes, L3VPN etc.

These MDB profiles also go through a continuous process of fine-tuning to adapt to new technology areas like SRv6 and to accommodate new critical use cases.

Please stay tuned for more updates!
