Cisco 8000 FIB Scale

Introduction

Cisco 8000 routers have been widely adopted and deployed by service provider and cloud customers for core and peering roles. For those positions, FIB scale matters.

This article documents how Silicon One Q100- and Q200-based systems support current BGP scale. Based on projected growth, tests also demonstrate that they are future-proof. Finally, a new feature introduced to increase FIB scale on Q200 is demonstrated. Telemetry data is also used to illustrate system capabilities.

Note: You can find Silicon One P100 FIB scale test here

What Is FIB Scale?

The Forwarding Information Base was defined in RFC 1812. This is what Fred Baker wrote in 1995:

Forwarding Information Base (FIB): The table containing the information necessary to forward IP Datagrams, in this document, is called the Forwarding Information Base. At minimum, this contains the interface identifier and next hop information for each reachable destination network prefix.

RFC 3222, another early RFC, defines the FIB size aspect:

Forwarding Information Base Size

Definition: Refers to the number of forwarding information base entries within a forwarding information base.

Discussion: The number of entries within a forwarding information base is one of the key elements that may influence the forwarding performance of a router. Generally, the more entries within the forwarding information base, the longer it could take to find the longest matching network prefix within the forwarding information base.

Measurement units: Number of routes

Issues:

See Also:

- forwarding information base (5.3)
- forwarding information base entry (5.4)
- forwarding information base prefix distribution (5.7)
- maximum forwarding information base size (6.1)

More than 20 years later, these points still matter when validating FIB scale on a modern router. The number of prefixes is important, but prefix distribution must also be considered during validation. Ideally, a real FIB should be replayed, and certification should not rely on an artificial lab scenario.

Until now, Cisco 8000 has officially supported up to 2M IPv4 and 512k IPv6 prefixes in the FIB. This is a multi-dimensional number, meaning both address families can be programmed to these maximum values at the same time. Released in March 2023, IOS-XR 7.9.1 brings a FIB scale enhancement that raises these numbers to 3M IPv4 and 1M IPv6 prefixes on Q200-based systems. This new feature is covered later in this article.

Did you know? A large-scale study conducted in 2019 among 30 ISPs and content providers revealed that 95% of traffic was generated by fewer than 1000 BGP prefixes!

Cisco 8000 FIB Implementation

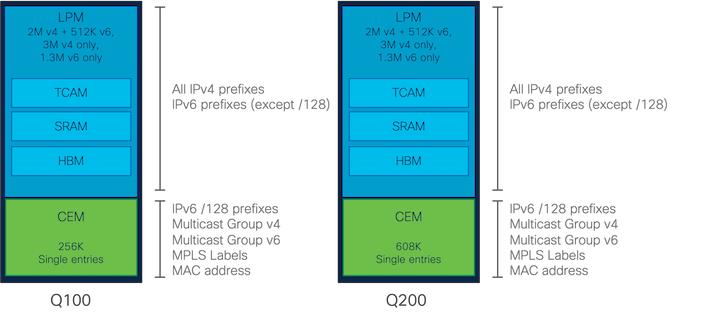

On Cisco Silicon One, the unicast FIB is stored in two major tables:

- Longest Prefix Match (LPM) database: stores IPv4 and IPv6 routes

- Central Exact Match (CEM) database: stores IPv6 host routes (/128)

Q100 and Q200 Default LPM and CEM allocation (courtesy CS Lee, Cisco)

LPM and CEM are physically spread across different Q100 and Q200 ASIC memories, which can be on-die or off-die (e.g. TCAM, SRAM, HBM). This mapping is out of scope for this article.
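To make the difference between the two tables concrete, here is a minimal Python model that assumes a purely software implementation. An exact-match lookup is a single hash lookup on the full address. A longest prefix match has to find the most specific prefix covering the destination, which this model does by probing one hash table per prefix length, longest first. The ToyFib class and the next-hop labels are hypothetical, and this is not how Silicon One implements LPM and CEM in hardware.

```python
import ipaddress
from typing import Dict, Optional, Tuple, Union

Address = Union[ipaddress.IPv4Address, ipaddress.IPv6Address]
Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


class ToyFib:
    """Hypothetical software model of an exact-match table plus an LPM table."""

    def __init__(self) -> None:
        # CEM-like table: IPv6 host routes (/128) keyed by full address.
        self.exact: Dict[Address, str] = {}
        # LPM-like table: one hash table per (IP version, prefix length).
        self.lpm: Dict[Tuple[int, int], Dict[Network, str]] = {}

    def add(self, prefix: str, next_hop: str) -> None:
        net = ipaddress.ip_network(prefix)
        if net.version == 6 and net.prefixlen == 128:
            self.exact[net.network_address] = next_hop
        else:
            self.lpm.setdefault((net.version, net.prefixlen), {})[net] = next_hop

    def lookup(self, destination: str) -> Optional[str]:
        addr = ipaddress.ip_address(destination)
        # Exact match: a single hash lookup on the full address.
        if addr in self.exact:
            return self.exact[addr]
        # Longest prefix match: probe the most specific lengths first.
        for length in range(addr.max_prefixlen, -1, -1):
            table = self.lpm.get((addr.version, length))
            if table:
                candidate = ipaddress.ip_network(f"{addr}/{length}", strict=False)
                if candidate in table:
                    return table[candidate]
        return None


fib = ToyFib()
fib.add("0.0.0.0/0", "default")
fib.add("10.0.0.0/8", "peer-A")
fib.add("10.1.2.0/24", "peer-B")
fib.add("2001:db8::/32", "peer-C")
fib.add("2001:db8::1/128", "loopback")

print(fib.lookup("10.1.2.7"))       # peer-B (the /24 is more specific than the /8)
print(fib.lookup("10.9.9.9"))       # peer-A
print(fib.lookup("203.0.113.1"))    # default
print(fib.lookup("2001:db8::1"))    # loopback (exact match)
print(fib.lookup("2001:db8:1::5"))  # peer-C
```

The model also illustrates the RFC 3222 remark: an exact match costs one probe regardless of table size, while the LPM search may probe many prefix lengths before it finds a match.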

Did you know? Cisco 8100 systems use Silicon One ASICs without HBM (Q200L, G100). This limits buffering capacity as well as FIB scale, which is why they are used for routed DC applications. Please refer to this deployment note for 8100 systems positioning.

FIB Scale Testing Methodology

FIB scale could have been tested artificially by injecting millions of consecutive prefixes. However, this does not match field reality, where the prefix-length distribution is very uneven.

Instead, all tests in this article use a real customer production profile. A major Service Provider FIB was extracted from their live network. It was used to illustrate current resource utilization and to validate target future growth.

Those production devices process a full BGP routing view. As of March 2023, this represents 935k IPv4 and 174k IPv6 prefixes.

On top of Internet routes, there is also a large number of internal routes. This customer runs a large-scale ISIS IGP with 28k IPv4 ISIS routes: 18k loopbacks (/32) and 10k P2P and LAN links (/30 and /31). The backbone is dual-stack, and ISIS also carries 2.5k IPv6 prefixes: 1.5k loopbacks (/128) and 1k P2P links (/126). Additional internal routes are carried over iBGP for both address families.

The table below summarizes prefix origin, volume, and distribution:

| Prefixes | Current Scale IPv4 | Current Scale IPv6 |
|---|---|---|
| ISIS loopbacks | 18k | 1.5k |
| ISIS P2P & LAN | 10k | 1k |
| Total ISIS | 28k | 2.5k |
| BGP internal, including P2P (IPv4 /30, /31 & IPv6 /126, /127) | 80.5k, 40k | 34.5k, 1.6k |
| BGP Internet | 935k | 174k |
| Total FIB | 1.1M | 214k |

This FIB was compared with others collected over the last few months from cloud provider and service provider deployments across Europe, North America and Asia. It is representative of a typical deployment.

The testbed consists of Cisco 8000 routers running the latest available IOS-XR release (7.9.1) and routem, a Cisco in-house route generation tool. The customer’s FIB is replayed in the lab, and all prefixes are injected through BGP.

Current Resource Utilization

Let’s look at prefix distribution first.

IPv4 distribution is as follows:

```
RP/0/RP0/CPU0:8202-1#sh cef summary
Router ID is 192.0.2.37
IP CEF with switching (Table Version 0) for node0_RP0_CPU0
Load balancing: L4
Tableid 0xe0000000 (0x921fb410), Vrfid 0x60000000, Vrid 0x20000000, Flags 0x1019
Vrfname default, Refcount 1107623
1107580 routes, 0 protected, 0 reresolve, 0 unresolved (0 old, 0 new), 239237280 bytes
1107560 rib, 1 lsd, 15 aib, 0 internal, 2 interface, 4 special, 1 default routes
Prefix masklen distribution:
unicast: 61609 /32, 11224 /31, 38289 /30, 12009 /29, 3964 /28, 817 /27
1058 /26, 4128 /25, 614055 /24, 94219 /23, 108732 /22, 51033 /21
41683 /20, 24931 /19, 13801 /18, 8218 /17, 13499 /16, 2037 /15
1203 /14, 589 /13, 304 /12, 101 /11, 40 /10, 13 /9
17 /8 , 1 /0
broadcast: 4 /32
multicast: 1 /24, 1 /4
```
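As a sanity check, the unicast mask-length counts above can be parsed and summed offline. The Python sketch below assumes the "count /length" text format shown in this output. parse_masklen is a hypothetical helper. The unicast counts add up to 1,107,574. Adding the 4 broadcast and 2 multicast entries gives the 1,107,580 routes reported by CEF, and /24 alone accounts for more than half of the table.

```python
import re
from typing import Dict

# Unicast section of "sh cef summary" (IPv4), copied from the output above.
IPV4_UNICAST = """
unicast: 61609 /32, 11224 /31, 38289 /30, 12009 /29, 3964 /28, 817 /27
1058 /26, 4128 /25, 614055 /24, 94219 /23, 108732 /22, 51033 /21
41683 /20, 24931 /19, 13801 /18, 8218 /17, 13499 /16, 2037 /15
1203 /14, 589 /13, 304 /12, 101 /11, 40 /10, 13 /9
17 /8 , 1 /0
"""


def parse_masklen(text: str) -> Dict[int, int]:
    """Return {prefix_length: count} built from every 'count /length' pair."""
    dist: Dict[int, int] = {}
    for count, length in re.findall(r"(\d+)\s*/(\d+)", text):
        dist[int(length)] = dist.get(int(length), 0) + int(count)
    return dist


dist = parse_masklen(IPV4_UNICAST)
total = sum(dist.values())
print(total)  # 1107574

# Three most common prefix lengths and their share of the unicast FIB.
for length in sorted(dist, key=dist.get, reverse=True)[:3]:
    print(f"/{length}: {dist[length]} prefixes, {100 * dist[length] / total:.1f}%")
# /24: 614055 prefixes, 55.4%
# /22: 108732 prefixes, 9.8%
# /23: 94219 prefixes, 8.5%
```

The same helper works on the IPv6 unicast lines, since they use the same "count /length" format.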

IPv6 distribution is as follows:

```
RP/0/RP0/CPU0:8202-1#sh cef ipv6 summary
Router ID is 192.0.2.37
IP CEF with switching (Table Version 0) for node0_RP0_CPU0
Load balancing: L4
Tableid 0xe0800000 (0x928b0778), Vrfid 0x60000000, Vrid 0x20000000, Flags 0x1019
Vrfname default, Refcount 214659
214637 routes, 0 protected, 0 reresolve, 0 unresolved (0 old, 0 new), 46361592 bytes
214624 rib, 0 lsd, 7 aib, 0 internal, 0 interface, 6 special, 1 default routes
Prefix masklen distribution:
unicast: 4610 /128, 144 /127, 2514 /126, 47 /125, 1042 /124, 2 /120
148 /112, 183 /96 , 5367 /64 , 20673 /62 , 24 /61 , 6 /60
1 /59 , 22 /58 , 5 /57 , 314 /56 , 2 /53 , 2 /52
2 /51 , 1 /49 , 105403 /48 , 1979 /47 , 2345 /46 , 1120 /45
11066 /44 , 2617 /43 , 4549 /42 , 1312 /41 , 9970 /40 , 960 /39
1527 /38 , 873 /37 , 4778 /36 , 759 /35 , 2054 /34 , 2432 /33
20941 /32 , 206 /31 , 519 /30 , 3887 /29 , 122 /28 , 20 /27
15 /26 , 8 /25 , 27 /24 , 7 /23 , 6 /22 , 3 /21
14 /20 , 1 /19 , 1 /16 , 1 /10 , 1 /0
multicast: 1 /128, 1 /104, 3 /16
```

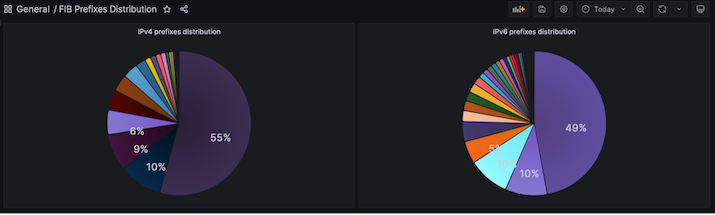

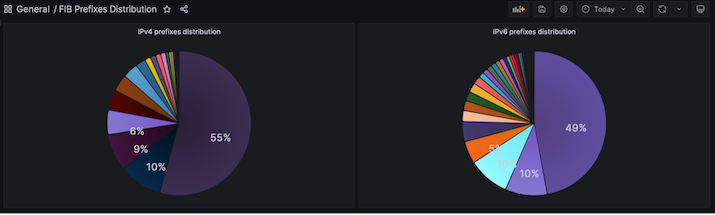

This is a visual representation of the FIB distribution using telemetry:

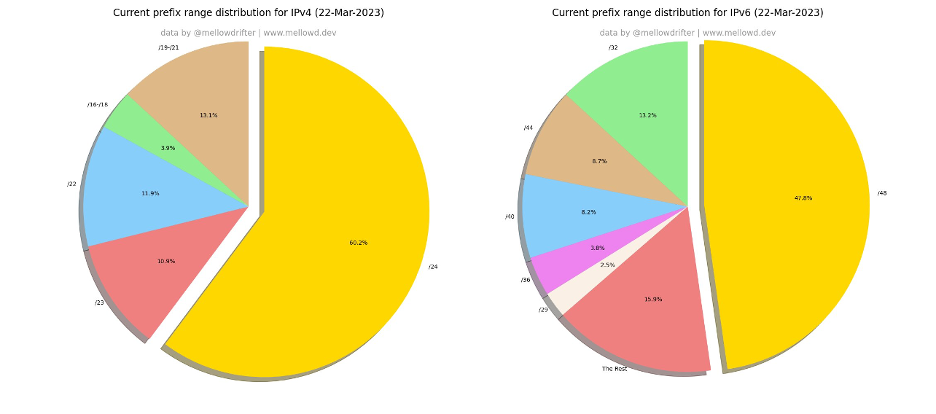

This is consistent with the distribution published in the daily @bgp4_table and @bgp6_table tweets:

BGP prefixes distribution as seen by @bgp4_table and @bgp6_table (courtesy Darren O’Connor)

Q100

For this test, a Cisco 8202 fixed system is used. It uses a single Silicon One Q100 ASIC.

For Q100, current LPM utilization is 52%:

```
RP/0/RP0/CPU0:8202-1#sh controllers npu resources lpmtcam location 0/RP0/CPU0
HW Resource Information
Name : lpm_tcam
Asic Type : Q100

NPU-0
OOR Summary
Estimated Max Entries : 100
Red Threshold : 95 %
Yellow Threshold : 80 %
OOR State : Green
OOR State Change Time : 2023.Mar.20 16:03:51 UTC

OFA Table Information
(May not match HW usage)
iprte : 1107561
ip6rte : 210022
ip6mcrte : 0
ipmcrte : 0

Current Hardware Usage
Name: lpm_tcam
Estimated Max Entries : 100
Total In-Use : 52 (52 %)
OOR State : Green
OOR State Change Time : 2023.Mar.20 16:03:51 UTC
Name: v4_lpm
Total In-Use : 1107581
Name: v6_lpm
Total In-Use : 210025
```

CEM occupancy is expected to be marginal, as the number of /128 prefixes is low:

```
RP/0/RP0/CPU0:8202-1#sh controllers npu resources centralem location 0/RP0/CPU0
HW Resource Information
Name : central_em
Asic Type : Q100

NPU-0
OOR Summary
Estimated Max Entries : 100
Red Threshold : 95 %
Yellow Threshold : 80 %
OOR State : Green

OFA Table Information
(May not match HW usage)
brmac : 0
iprte : 17
ip6rte : 4610
mplsrte : 5
puntlptspolicer : 92
ipmcrte : 0
ip6mcrte : 0
aclpolicer : 0

Current Hardware Usage
Name: central_em
Estimated Max Entries : 100
Total In-Use : 3 (3 %)
OOR State : Green
```

The rest of this article focuses on LPM only.

Q200

For this test, an 88-LC0-36FH line card is used inside a distributed system. It is based on three Silicon One Q200 NPUs.

LPM utilization is lower, with 41% of resources consumed:

```
RP/0/RP1/CPU0:8812-1#sh controllers npu resources lpmtcam location 0/1/CPU0
HW Resource Information
Name : lpm_tcam
Asic Type : Q200

NPU-0
OOR Summary
Estimated Max Entries : 100
Red Threshold : 95 %
Yellow Threshold : 80 %
OOR State : Green

OFA Table Information
(May not match HW usage)
iprte : 1107694
ip6rte : 210110
ip6mcrte : 0
ipmcrte : 0

Current Hardware Usage
Name: lpm_tcam
Estimated Max Entries : 100
Total In-Use : 41 (41 %)
OOR State : Green
Name: v4_lpm
Total In-Use : 1107748
Name: v6_lpm
Total In-Use : 210156

NPU-1
OOR Summary
Estimated Max Entries : 100
Red Threshold : 95 %
Yellow Threshold : 80 %
OOR State : Green

OFA Table Information
(May not match HW usage)
iprte : 1107694
ip6rte : 210110
ip6mcrte : 0
ipmcrte : 0

Current Hardware Usage
Name: lpm_tcam
Estimated Max Entries : 100
Total In-Use : 41 (41 %)
OOR State : Green
Name: v4_lpm
Total In-Use : 1107748
Name: v6_lpm
Total In-Use : 210156

NPU-2
OOR Summary
Estimated Max Entries : 100
Red Threshold : 95 %
Yellow Threshold : 80 %
OOR State : Green

OFA Table Information
(May not match HW usage)
iprte : 1107694
ip6rte : 210110
ip6mcrte : 0
ipmcrte : 0

Current Hardware Usage
Name: lpm_tcam
Estimated Max Entries : 100
Total In-Use : 41 (41 %)
OOR State : Green
Name: v4_lpm
Total In-Use : 1107748
Name: v6_lpm
Total In-Use : 210156
```

Info: In an 8800 distributed system, LPM is programmed on all line cards and all NPUs.

Future BGP Growth Support

Now that resource utilization has been checked against a current production deployment for both Q100 and Q200 ASICs, it’s time to simulate 5-year growth, which will be driven mainly by BGP table growth.

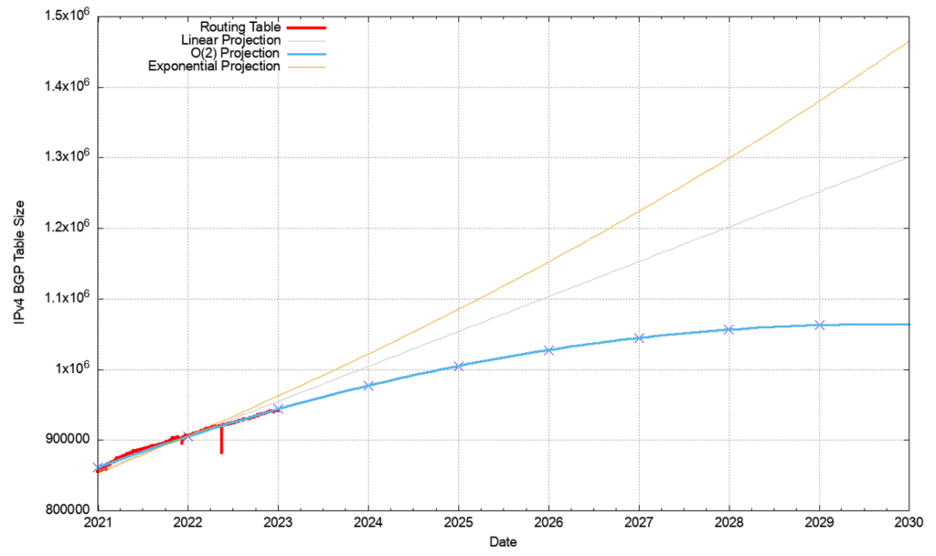

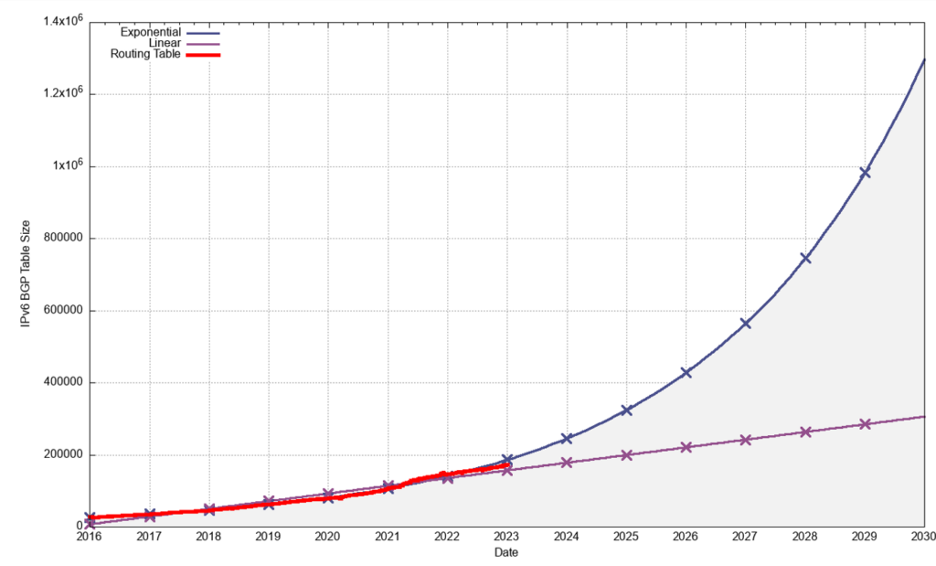

APNIC provides BGP table forecasts in its yearly BGP table report.

For IPv4, data shows that growth follows a linear model, with about 150 additional prefixes per day. About 1.2M IPv4 routes are expected in 2028, and this is the value that will be tested.

IPv4 BGP table predictions (courtesy Geoff Huston, APNIC)

For IPv6, things are more complex. Linear and exponential models give between 400k and 1.2M IPv6 routes in January 2028, which is a huge difference. The midpoint value of 800k will be tested.

Projections of IPv6 BGP table size (courtesy Geoff Huston, APNIC)
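The tested values follow from simple arithmetic on the figures above. The Python check below assumes a five-year horizon from March 2023 and 365-day years. Both are simplifications.

```python
# Back-of-the-envelope check of the 2028 targets quoted above.
YEARS = 5              # March 2023 -> 2028 (simplification)
DAYS_PER_YEAR = 365    # leap days ignored

ipv4_today = 935_000   # IPv4 Internet prefixes, March 2023
ipv4_per_day = 150     # linear growth model from the APNIC report
ipv4_2028 = ipv4_today + ipv4_per_day * DAYS_PER_YEAR * YEARS
print(ipv4_2028)       # 1208750, i.e. ~1.2M

ipv6_linear = 400_000          # low bound (linear model), January 2028
ipv6_exponential = 1_200_000   # high bound (exponential model), January 2028
ipv6_target = (ipv6_linear + ipv6_exponential) // 2
print(ipv6_target)     # 800000
```

These are Internet BGP prefixes only. Internal ISIS and iBGP routes come on top, which largely explains why the iprte and ip6rte counters in the 2028 outputs are higher than these values.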

To keep the future FIB realistic, the current prefix distribution is applied to the additional injected prefixes. As a result, most additional IPv4 prefixes are /24s disaggregated from larger blocks, and most additional IPv6 prefixes are recently allocated and advertised /48, /32, /44, etc.
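Here is a minimal Python sketch of this approach. It assumes additional prefixes are split in proportion to the current mask-length shares, and it uses largest-remainder rounding so the counts add up exactly to the target. For brevity, only a subset of the IPv6 lengths from the sh cef ipv6 summary output is used. scale_distribution is a hypothetical helper and is not necessarily how routem builds the profile.

```python
from typing import Dict


def scale_distribution(current: Dict[int, int], extra: int) -> Dict[int, int]:
    """Split `extra` new prefixes across mask lengths in proportion to `current`.

    Largest-remainder rounding keeps the total exactly equal to `extra`.
    """
    total = sum(current.values())
    quotas = {length: extra * count / total for length, count in current.items()}
    alloc = {length: int(quota) for length, quota in quotas.items()}
    leftover = extra - sum(alloc.values())
    # Give the remaining units to the lengths with the largest fractional parts.
    by_remainder = sorted(quotas, key=lambda length: quotas[length] - alloc[length], reverse=True)
    for length in by_remainder[:leftover]:
        alloc[length] += 1
    return alloc


# Subset of today's IPv6 distribution (counts from "sh cef ipv6 summary").
ipv6_current = {48: 105403, 32: 20941, 44: 11066, 40: 9970}
# 2028 target (800k) minus today's IPv6 Internet table (174k).
ipv6_extra = 800_000 - 174_000

alloc = scale_distribution(ipv6_current, ipv6_extra)
for length, count in sorted(alloc.items(), key=lambda item: item[1], reverse=True):
    print(f"/{length}: +{count} prefixes")
```

Because the proportions come from the live table, /48 receives the bulk of the new IPv6 prefixes. Applying the same function to the IPv4 counts would likewise favor /24.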

Q100

With this 2028 profile, Q100 LPM utilization reaches 94%. Notably, the system does not enter the Red Out-of-Resource (OOR) state (95% utilization), and free LPM entries remain:

```
RP/0/RP0/CPU0:8202-1#sh controllers npu resources lpmtcam location 0/RP0/CPU0
HW Resource Information
Name : lpm_tcam
Asic Type : Q100

NPU-0
OOR Summary
Estimated Max Entries : 100
Red Threshold : 95 %
Yellow Threshold : 80 %
OOR State : Yellow
OOR State Change Time : 2023.Mar.23 07:28:01 UTC

OFA Table Information
(May not match HW usage)
iprte : 1398110
ip6rte : 865460
ip6mcrte : 0
ipmcrte : 0

Current Hardware Usage
Name: lpm_tcam
Estimated Max Entries : 100
Total In-Use : 94 (94 %)
OOR State : Yellow
OOR State Change Time : 2023.Mar.23 07:28:01 UTC
Name: v4_lpm
Total In-Use : 1398131
Name: v6_lpm
Total In-Use : 865455
```

10 years after FCS (first customer shipment), Q100-based systems will still be able to handle the full BGP view plus additional prefixes.

Q200

With the 2028 profile, Q200 keeps more margin, with 84% LPM utilization:

```
RP/0/RP1/CPU0:8812-1#sh controllers npu resources lpmtcam location 0/1/CPU0
HW Resource Information
Name : lpm_tcam
Asic Type : Q200

NPU-0
OOR Summary
Estimated Max Entries : 100
Red Threshold : 95 %
Yellow Threshold : 80 %
OOR State : Yellow
OOR State Change Time : 2023.Mar.23 14:13:53 UTC

OFA Table Information
(May not match HW usage)
iprte : 1398243
ip6rte : 865548
ip6mcrte : 0
ipmcrte : 0

Current Hardware Usage
Name: lpm_tcam
Estimated Max Entries : 100
Total In-Use : 84 (84 %)
OOR State : Yellow
OOR State Change Time : 2023.Mar.23 14:13:53 UTC
Name: v4_lpm
Total In-Use : 1398297
Name: v6_lpm
Total In-Use : 865594

snip
```

At 84% utilization, the Yellow threshold (80% by default) is crossed, as expected.
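The OOR color can be derived from the Total In-Use percentage and the default thresholds shown in these outputs (Yellow 80%, Red 95%). In the minimal Python sketch below, oor_state and lpm_usage are hypothetical helpers. It assumes a state changes once utilization reaches the threshold (>=), which the outputs do not confirm. Applied to the utilization values measured in this article, it reproduces the OOR states reported by the router.

```python
import re

YELLOW_DEFAULT = 80  # "Yellow Threshold : 80 %" in the outputs above
RED_DEFAULT = 95     # "Red Threshold : 95 %" in the outputs above


def oor_state(usage_pct: int, yellow: int = YELLOW_DEFAULT, red: int = RED_DEFAULT) -> str:
    """Map a utilization percentage to an OOR color (assumes >= comparisons)."""
    if usage_pct >= red:
        return "Red"
    if usage_pct >= yellow:
        return "Yellow"
    return "Green"


def lpm_usage(show_output: str) -> int:
    """Return the first 'Total In-Use : N (N %)' percentage found in the text."""
    match = re.search(r"Total In-Use\s*:\s*\d+\s*\((\d+)\s*%\)", show_output)
    if match is None:
        raise ValueError("no 'Total In-Use' percentage found")
    return int(match.group(1))


measured = [
    ("Q100 2023", 52),
    ("Q200 2023", 41),
    ("Q100 2028", 94),
    ("Q200 2028", 84),
    ("Q200 2028 with tcam-banks profile", 42),
]
for label, pct in measured:
    print(f"{label}: {pct}% -> {oor_state(pct)}")
# Q100 2023: 52% -> Green
# Q200 2023: 41% -> Green
# Q100 2028: 94% -> Yellow
# Q200 2028: 84% -> Yellow
# Q200 2028 with tcam-banks profile: 42% -> Green

print(lpm_usage("Total In-Use : 94 (94 %)\nName: v4_lpm\nTotal In-Use : 1398131"))  # 94
```

The v4_lpm and v6_lpm lines have no percentage in parentheses, so the regular expression skips them. On multi-NPU line cards, lpm_usage returns the NPU-0 value because it stops at the first match.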

8000 FIB Scale Increase

Starting with IOS-XR 7.9.1, a new hw-module profile increases Cisco 8000 FIB scale up to 3M IPv4 and 1M IPv6 prefixes: hw-module profile route scale lpm tcam-banks

This profile is only supported on Q200 ASICs, and the system must be reloaded after activation:

```
RP/0/RP0/CPU0:8201-32FH(config)#hw-module profile route scale lpm tcam-banks
Mon Mar 20 22:05:53.261 UTC
In order to activate/deactivate this Scale LPM TCAM Banks profile, you must manually reload the chassis/all line cards
RP/0/RP0/CPU0:8201-1(config)#commit
Mon Mar 20 22:05:56.956 UTC
RP/0/RP0/CPU0:Mar 20 22:05:56.994 UTC: npu_drvr[316]: %FABRIC-NPU_DRVR-3-HW_MODULE_PROFILE_CHASSIS_CFG_CHANGED : Hw-module profile config changed for "route scale lpm high", do chassis reload to get the most recent config updated
```

ASIC resources are finite: once the profile is activated, some TCAM banks previously allocated to classification ACLs are used to store prefixes instead. As a consequence, ACL scale is reduced (from 9.5k to 7.5k IPv4 entries, or from 5k to 4k IPv6 entries). Other features that rely on TCAM classification (BGP Flowspec, LPTS) are also impacted.

This is what LPM utilization looks like for the same 5-year growth projection:

```
RP/0/RP0/CPU0:8201-32FH#sh controllers npu resources lpmtcam location 0/RP0/CPU0
Thu Mar 23 14:14:56.905 UTC
HW Resource Information
Name : lpm_tcam
Asic Type : Q200

NPU-0
OOR Summary
Estimated Max Entries : 100
Red Threshold : 95 %
Yellow Threshold : 80 %
OOR State : Green

OFA Table Information
(May not match HW usage)
iprte : 1398116
ip6rte : 865463
ip6mcrte : 0
ipmcrte : 0

Current Hardware Usage
Name: lpm_tcam
Estimated Max Entries : 100
Total In-Use : 42 (42 %)
OOR State : Green
Name: v4_lpm
Total In-Use : 1398141
Name: v6_lpm
Total In-Use : 865467
```

With only 42% LPM utilization for the same 2028 profile (versus 84% without it), this profile leaves even more room for growth through 2030 and beyond.

Current (2023) utilization is illustrated with telemetry in the next section.

FIB Telemetry

CEF prefix distribution can be collected with the following CEF sensor-group:

```
telemetry model-driven
 sensor-group CEF
  sensor-path Cisco-IOS-XR-fib-common-oper:fib/nodes/node/protocols/protocol/fib-summaries/fib-summary/prefix-masklen-distribution/unicast-prefixes
```

When using Telegraf, additional configuration is required to treat the mask length as a tag:

```
# In the [[inputs.cisco_telemetry_mdt]] section of telegraf.conf
embedded_tags = ["Cisco-IOS-XR-fib-common-oper:fib/nodes/node/protocols/protocol/fib-summaries/fib-summary/prefix-masklen-distribution/unicast-prefix/mask-length"]
```

Here is a sample Grafana visualization:

Cisco 8000 LPM TCAM and other NPU resources can be monitored with the Open Forwarding Agent (OFA) sensor-group:

```
telemetry model-driven
 sensor-group OFA
  sensor-path Cisco-IOS-XR-platforms-ofa-oper:ofa
```

As with CEF, extra configuration must be added to create tags when using Telegraf:

```
embedded_tags = [
  "Cisco-IOS-XR-platforms-ofa-oper:ofa/stats/nodes/node/Cisco-IOS-XR-8000-platforms-npu-resources-oper:hw-resources-datas/hw-resources-data/npu-hwr/bank/bank-name",
  "Cisco-IOS-XR-platforms-ofa-oper:ofa/stats/nodes/node/Cisco-IOS-XR-8000-platforms-npu-resources-oper:hw-resources-datas/hw-resources-data/npu-hwr/bank/lt-hwr/name"
]
```

Here is a sample Grafana visualization of the current 2023 profile on a Q200 system:

Conclusion

These tests measured current and future Cisco 8000 FIB scale. Although there is no immediate need for it, the new FIB scale increase feature was demonstrated to support even higher scale. Resource monitoring and visualization were done using both traditional CLI and streaming telemetry. Together, these results confirm that 8000 routers can meet peering and core FIB requirements.

Acknowledgement

Special thanks to Pamela Krishnamachary, Uzair Rehman and Selvam Ramanathan.
