XR App-hosting architecture: Quick Look!

If you’ve been following the set of tutorials in the XR toolbox series, you might have noticed that we haven’t actually delved into the internal architecture of IOS-XR. While there are several upcoming documents that will shed light on the deep internal workings of IOS-XR, I thought I’d take a quick stab at the internals for the uninitiated.

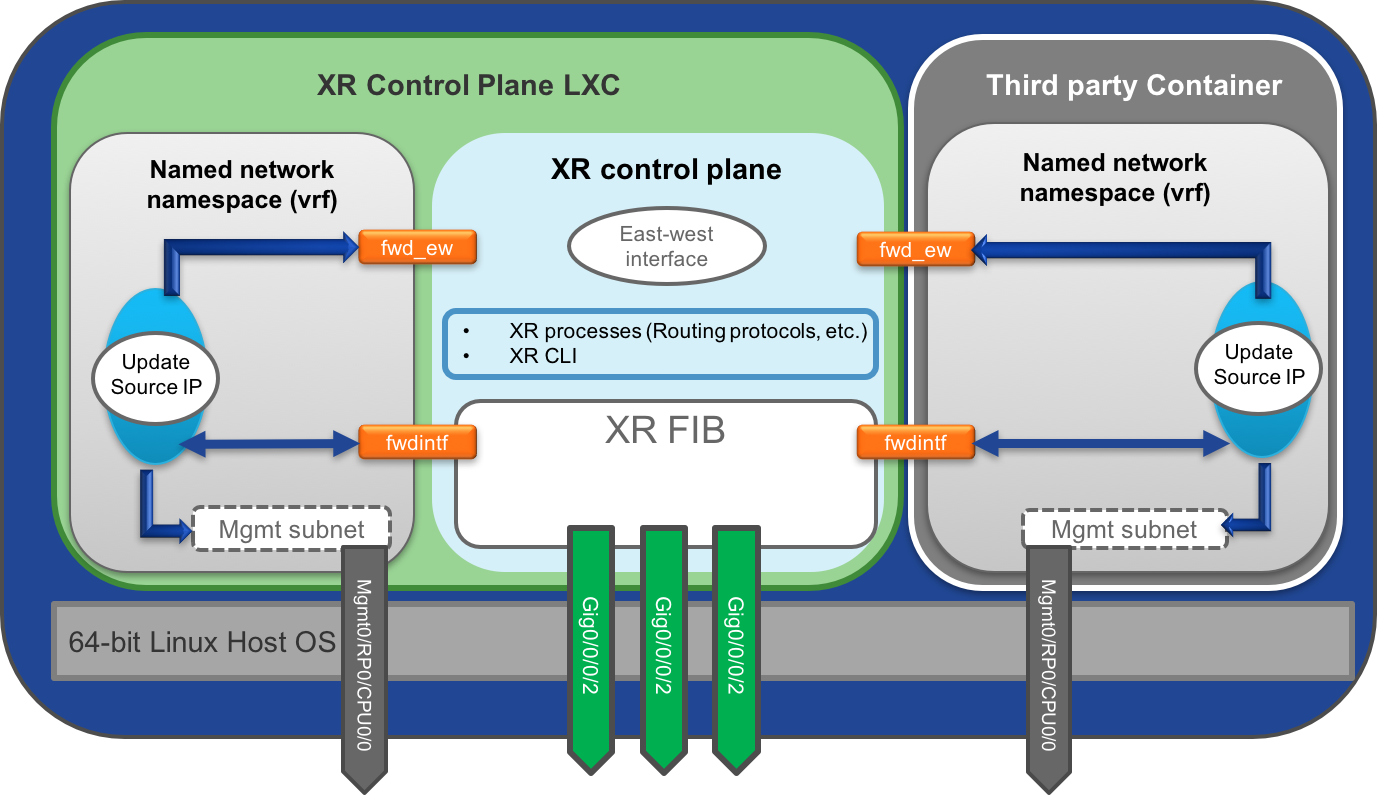

This is what the internal software architecture and plumbing, replete with the containers, network namespaces and XR interfaces, looks like:

Alright, back up. The above figure seems pretty daunting to understand, so let’s try to deconstruct it:

- At the bottom of the figure, in gray, we have the host (hypervisor) linux environment. This is a 64-bit linux kernel running the Wind River Linux 7 (WRL7) distribution. The rest of the components run as containers (LXCs) on top of the host.

- In green, we see the container called the XR Control plane LXC (or XR LXC). This runs a Wind River Linux 7 (WRL7) environment as well and contains the XR control plane and the XR linux environment:

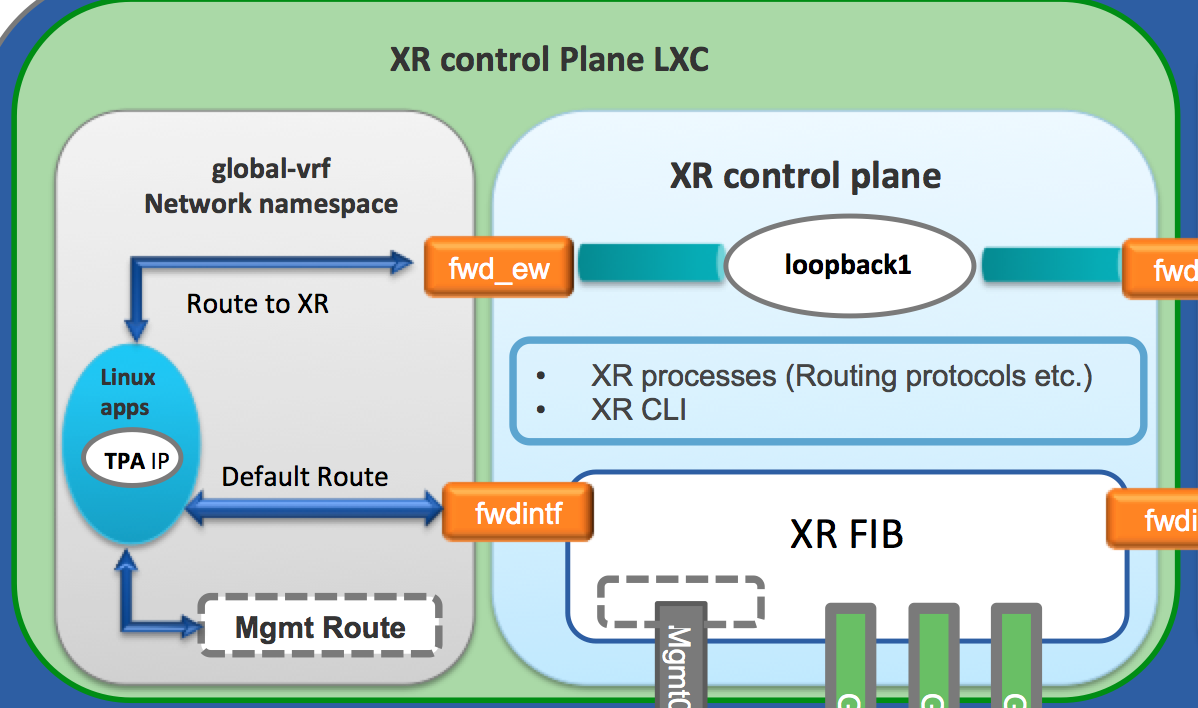

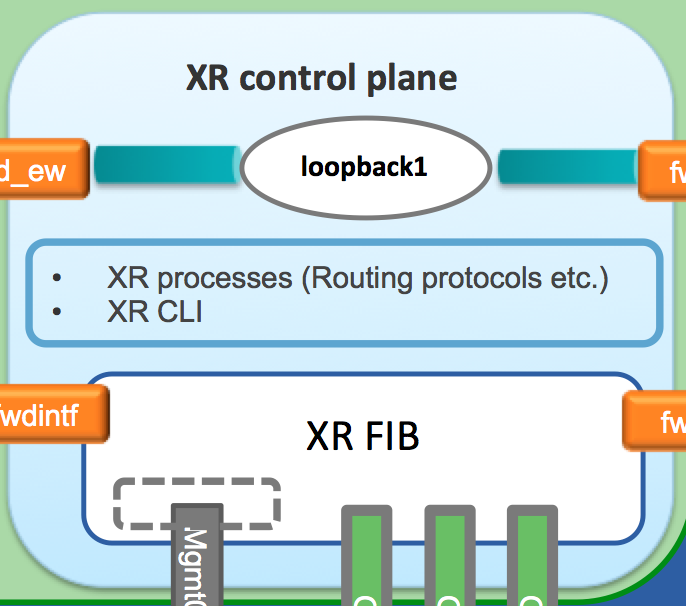

- Inside the XR control plane LXC, if we zoom in further, the XR control plane processes are represented distinctly in blue as shown below. This is where the XR routing protocols like BGP, OSPF etc. run. The XR CLI presented to the user is also one of the processes.

-

See the gray box inside the XR control plane LXC ? This is the XR linux shell.

P.S. This is what you drop into when you issue a

vagrant ssh[*].

Another way to get into the XR linux shell is by issuing abashcommand in XR CLI.The XR linux shell that the user interacts with is really the

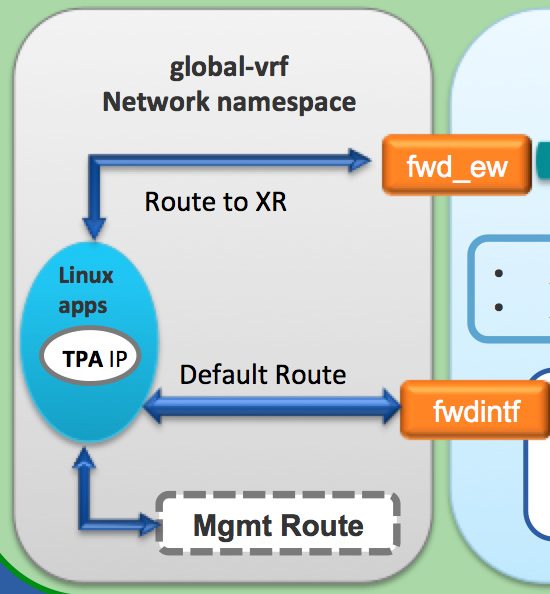

global-vrfnetwork namespace inside the control plane container. This corresponds to the global/default-vrf in IOS-XR.Only the interfaces in global/default vrf in XR appear in the XR linux shell today when you issue an ifconfig:

RP/0/RP0/CPU0:rtr1# RP/0/RP0/CPU0:rtr1# RP/0/RP0/CPU0:rtr1#show ip int br Sun Jul 17 11:52:15.049 UTC Interface IP-Address Status Protocol Vrf-Name Loopback0 1.1.1.1 Up Up default GigabitEthernet0/0/0/0 10.1.1.10 Up Up default GigabitEthernet0/0/0/1 11.1.1.10 Up Up default MgmtEth0/RP0/CPU0/0 10.0.2.15 Up Up default RP/0/RP0/CPU0:rtr1# RP/0/RP0/CPU0:rtr1# RP/0/RP0/CPU0:rtr1#bash Sun Jul 17 11:52:22.904 UTC [xr-vm_node0_RP0_CPU0:~]$ [xr-vm_node0_RP0_CPU0:~]$ifconfig Gi0_0_0_0 Link encap:Ethernet HWaddr 08:00:27:e0:7f:bb inet addr:10.1.1.10 Mask:255.255.255.0 inet6 addr: fe80::a00:27ff:fee0:7fbb/64 Scope:Link UP RUNNING NOARP MULTICAST MTU:1514 Metric:1 RX packets:0 errors:0 dropped:0 overruns:0 frame:0 TX packets:546 errors:0 dropped:3 overruns:0 carrier:1 collisions:0 txqueuelen:1000 RX bytes:0 (0.0 B) TX bytes:49092 (47.9 KiB) Gi0_0_0_1 Link encap:Ethernet HWaddr 08:00:27:26:ca:9c inet addr:11.1.1.10 Mask:255.255.255.0 inet6 addr: fe80::a00:27ff:fe26:ca9c/64 Scope:Link UP RUNNING NOARP MULTICAST MTU:1514 Metric:1 RX packets:0 errors:0 dropped:0 overruns:0 frame:0 TX packets:547 errors:0 dropped:3 overruns:0 carrier:1 collisions:0 txqueuelen:1000 RX bytes:0 (0.0 B) TX bytes:49182 (48.0 KiB) Mg0_RP0_CPU0_0 Link encap:Ethernet HWaddr 08:00:27:ab:bf:0d inet addr:10.0.2.15 Mask:255.255.255.0 inet6 addr: fe80::a00:27ff:feab:bf0d/64 Scope:Link UP RUNNING NOARP MULTICAST MTU:1514 Metric:1 RX packets:210942 errors:0 dropped:0 overruns:0 frame:0 TX packets:84664 errors:0 dropped:0 overruns:0 carrier:1 collisions:0 txqueuelen:1000 RX bytes:313575212 (299.0 MiB) TX bytes:4784245 (4.5 MiB) ---------------------------------- snip output -----------------------------------------Any Linux application hosted in this environment shares the process space with XR, and we refer to it as a

native application. - The FIB is programmed by the XR control plane exclusively. The global-vrf network namespace only sees a couple of routes by default:

A default route pointing to XR FIB. This way any packet with an unknown destination is handed-over by a linux application to XR for routing. This is achieved through a special interface called

fwdintfas shown in the figure above.Routes in the subnet of the Management Interface: Mgmt0/RP0/CPU0. The management subnet is local to the global-vrf network namespace.

To view these routes, simply issue an

ip routein the XR linux shell:AKSHSHAR-M-K0DS:native-app-bootstrap akshshar$ vagrant ssh rtr xr-vm_node0_RP0_CPU0:~$ xr-vm_node0_RP0_CPU0:~$ xr-vm_node0_RP0_CPU0:~$ ip route default dev fwdintf scope link src 10.0.2.15 10.0.2.0/24 dev Mg0_RP0_CPU0_0 proto kernel scope link src 10.0.2.15 xr-vm_node0_RP0_CPU0:~$ xr-vm_node0_RP0_CPU0:~$However if we configure

loopback 1in XR, a new route appears in the XR linux environment:RP/0/RP0/CPU0:rtr1# RP/0/RP0/CPU0:rtr1#conf t Sun Jul 17 11:59:33.014 UTC RP/0/RP0/CPU0:rtr1(config)# RP/0/RP0/CPU0:rtr1(config)#int loopback 1 RP/0/RP0/CPU0:rtr1(config-if)#ip addr 6.6.6.6/32 RP/0/RP0/CPU0:rtr1(config-if)#commit Sun Jul 17 11:59:49.970 UTC RP/0/RP0/CPU0:rtr1(config-if)# RP/0/RP0/CPU0:rtr1# RP/0/RP0/CPU0:rtr1#bash Sun Jul 17 11:59:58.941 UTC [xr-vm_node0_RP0_CPU0:~]$ [xr-vm_node0_RP0_CPU0:~]$ip route default dev fwdintf scope link src 10.0.2.15 6.6.6.6 dev fwd_ew scope link src 10.0.2.15 10.0.2.0/24 dev Mg0_RP0_CPU0_0 proto kernel scope link src 10.0.2.15 [xr-vm_node0_RP0_CPU0:~]$This is what we call the east-west route. Loopback1 is treated as a special remote interface from the perspective of the XR linux shell. It does not appear in ifconfig like the other interfaces. This way an application sitting inside the global-vrf network namespace can talk to XR on the same box by simply pointing to loopback1.
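To make the effect of these three routes concrete, here is a minimal Python sketch (not XR code) that replays a longest-prefix match over the `ip route` output shown above. The helper names `parse_routes` and `egress_device` are made up for illustration, and the parser only understands the simple `<prefix> dev <name> ...` lines seen here. Real kernel route selection also looks at metrics, policy rules and so on, which this sketch ignores.

```python
import ipaddress

# `ip route` output copied from the XR linux shell session above.
IP_ROUTE_OUTPUT = """\
default dev fwdintf scope link src 10.0.2.15
6.6.6.6 dev fwd_ew scope link src 10.0.2.15
10.0.2.0/24 dev Mg0_RP0_CPU0_0 proto kernel scope link src 10.0.2.15
"""


def parse_routes(text):
    """Turn simple `ip route` lines into (network, device) pairs."""
    routes = []
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue
        # `default` is shorthand for 0.0.0.0/0; a bare host address is a /32.
        prefix = "0.0.0.0/0" if fields[0] == "default" else fields[0]
        network = ipaddress.ip_network(prefix)
        device = fields[fields.index("dev") + 1]
        routes.append((network, device))
    return routes


def egress_device(routes, destination):
    """Pick the device of the most specific route covering `destination`."""
    address = ipaddress.ip_address(destination)
    matches = [(net, dev) for net, dev in routes if address in net]
    if not matches:
        return None
    # Longest prefix wins.
    return max(matches, key=lambda match: match[0].prefixlen)[1]


if __name__ == "__main__":
    routes = parse_routes(IP_ROUTE_OUTPUT)
    for destination in ("8.8.8.8", "6.6.6.6", "10.0.2.2"):
        print(destination, "->", egress_device(routes, destination))
```

With the routes above, an unknown destination such as 8.8.8.8 falls through to `fwdintf` (handed to XR for routing), 6.6.6.6 takes the east-west `fwd_ew` path, and anything in 10.0.2.0/24 stays on the management interface.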

-

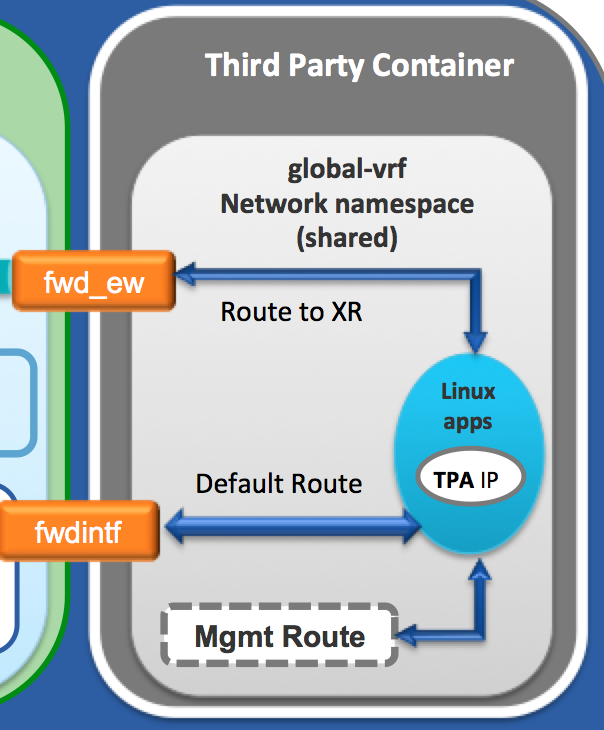

Finally, if you followed the Bring your own Container (LXC) App, you’ll notice that in the XML file meant to launch the lxc, we share the

global-vrfnetwork namespace with the container; specifically, in this section:This makes the architecture work seamlessly for

nativeandcontainerapplications. An LXC app has the same view of the world, the same routes and the same XR interfaces to take advantage of, as any native application with the shared global-vrf namespace. -
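If you want to check that two processes really do sit in the same network namespace, generic Linux gives you a simple handle: `/proc/<pid>/ns/net` is a symbolic link whose target (something like `net:[4026531992]`) is identical for processes that share a namespace. The Python sketch below compares those targets. The helper names `netns_id` and `shares_netns` are hypothetical, the `proc_root` parameter exists only so the logic can be exercised against a fake tree, and I have not verified this on an XR box itself. Reading the link of a process you do not own usually needs root.

```python
import os


def netns_id(pid, proc_root="/proc"):
    """Return the network-namespace identifier of a process.

    On Linux this is the target of /proc/<pid>/ns/net, e.g. 'net:[4026531992]'.
    """
    return os.readlink(os.path.join(proc_root, str(pid), "ns", "net"))


def shares_netns(pid_a, pid_b, proc_root="/proc"):
    """True when both processes report the same network namespace."""
    return netns_id(pid_a, proc_root) == netns_id(pid_b, proc_root)
```

For example, `shares_netns(os.getpid(), some_container_pid)` run from a native app would be expected to return `True` for a container launched with the shared namespace, where `some_container_pid` is a placeholder for the PID of a process inside that container.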

- You’ll also notice my awkward rendering for a linux app:

  Notice the `TPA IP`? This stands for Third Party App IP address.

  The purpose of the TPA IP is simple: set a src-hint for linux applications, so that traffic originating from the applications (native or LXC) can be tied to the loopback IP or any reachable IP of XR.

This approach mimics how routing protocols like to identify routers in complex topologies: through router-IDs. With the TPA IP, application traffic can be consumed, for example, across an OSPF topology just by relying on XR’s capability to distribute the loopback IP address selected as the src-hint.
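A quick way to see which source address the kernel would hand to an application that does not bind explicitly is a connected UDP socket: calling `connect()` on a UDP socket sends no packet, but it makes the kernel do a route lookup and pick a source address, which `getsockname()` then reveals. The sketch below is plain Python that runs on any Linux host. The function name `chosen_source` is made up, and I am assuming the src-hint surfaces as the `src` field on the routes in `ip route` (like the `src 10.0.2.15` in the earlier output), so treat it as a probe rather than a description of how XR implements the hint.

```python
import socket


def chosen_source(destination, port=9):
    """Return the source IPv4 address the kernel selects for `destination`.

    Uses a UDP socket, so connect() only records the peer and triggers a
    route lookup; nothing is transmitted on the wire.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.connect((destination, port))
        return sock.getsockname()[0]


if __name__ == "__main__":
    # Loopback is reachable everywhere, so it makes a safe first probe.
    print(chosen_source("127.0.0.1"))
```

Running the same probe against a remote destination from inside the global-vrf namespace, before and after setting the src-hint, is one way to observe the change from an application’s point of view.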

We go into further detail here: Set the src-hint for Application traffic

That pretty much wraps it up. Remember, XR handles the routing and applications use only a subset of the routing table to piggy-back on XR for reachability!
